If you’ve ever wondered how machine learning could combine the sounds of, say, a flute and a snare, you have probably come across Magenta, an ongoing endeavour within Google that explores how machine learning can create art and music in new ways.

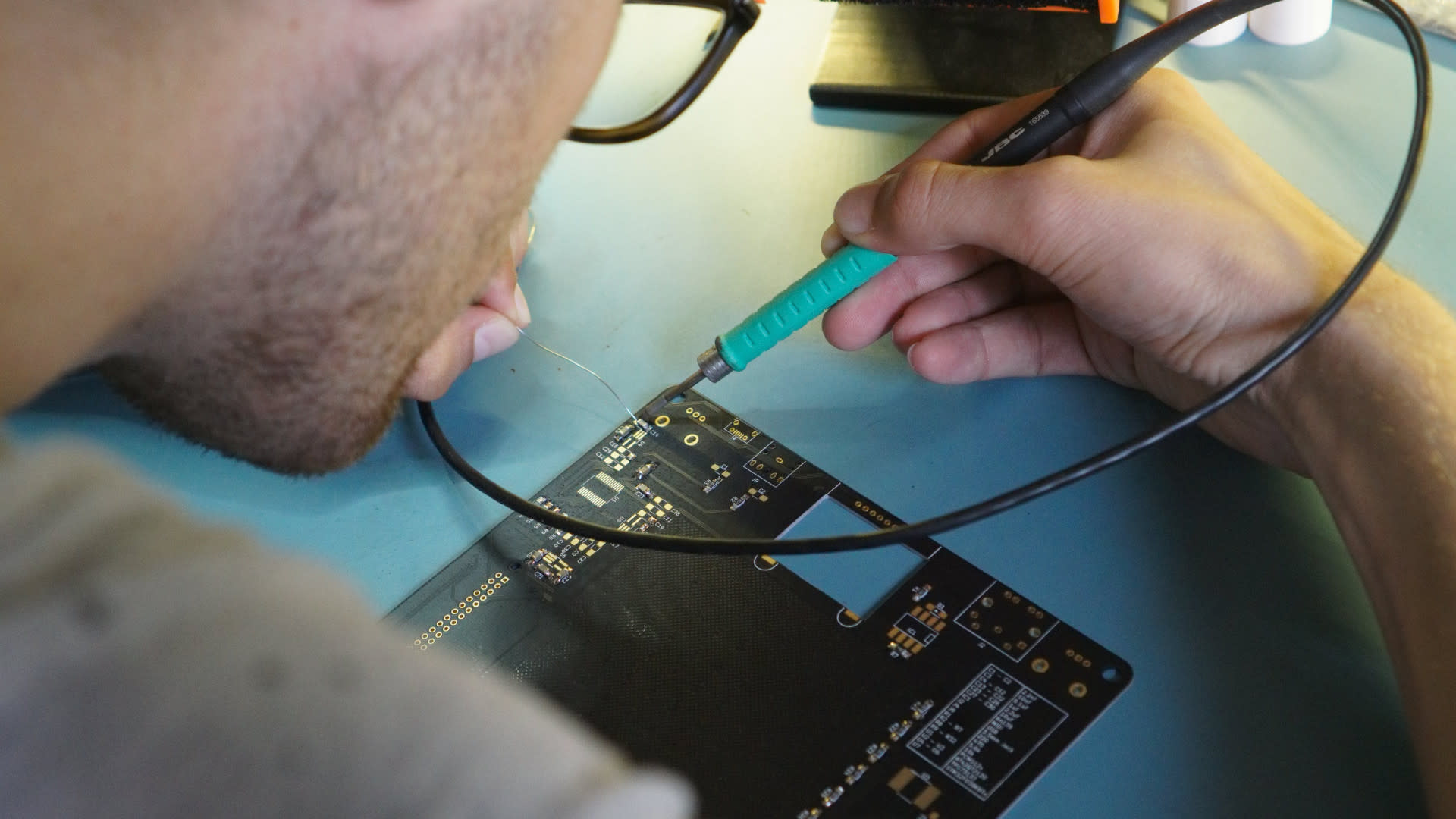

NSynth (Neural Synthesizer) is a machine learning algorithm by Magenta that, rather than blending or layering multiple sounds, synthesizes an entirely new sound from the acoustic qualities of the input sounds. Together with Google Creative Lab, Magenta developed a physical interface, dubbed NSynth Super, for experimenting with the sounds the algorithm generates. The best part is that Google released all the source files (see: GitHub) so that music and technology enthusiasts like me can build their own. So that’s what I did.

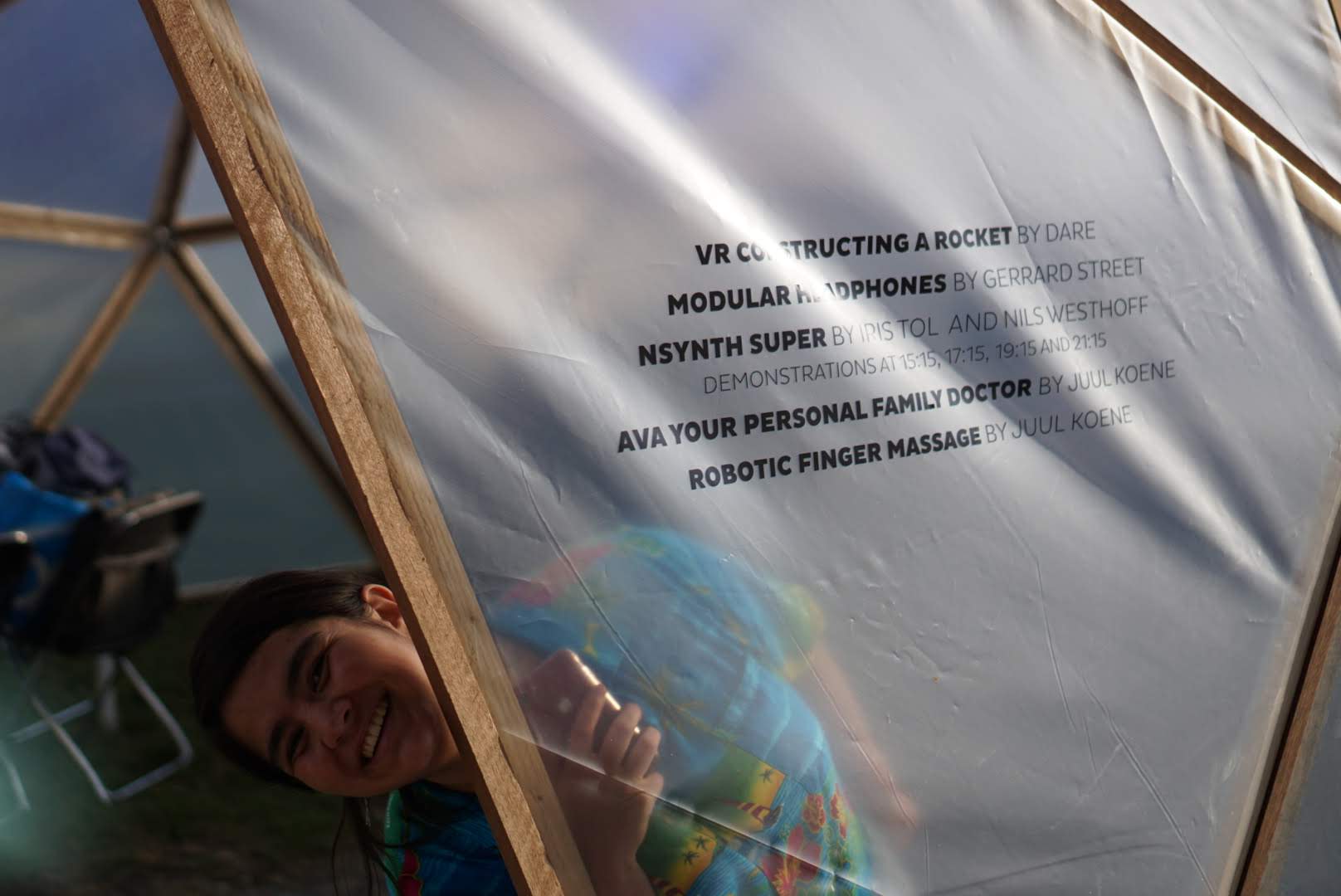

Together with Iris Tol, I built an NSynth Super that was displayed at the International Festival of Technology in Delft, from 6 to 8 June 2018.

The entire setup is still a work in progress, but my first time improvising live looked a little like this: a Traktor F1 MIDI-synced to Ableton Live, with a patch I created in Live so I could play the NSynth Super from a USB MIDI keyboard.

There’s still so much to explore with the NSynth algorithm. I’ll be experimenting with different ways to use the NSynth Super in a live environment. I’ve already set up the audio pipeline to generate my own sounds with the algorithm, so I’ll also be collecting source sounds over the coming weeks. Keep an eye out for updates, because this is only the beginning.

If you haven’t yet, you should probably watch Google’s video about the NSynth Super too.